Abstract

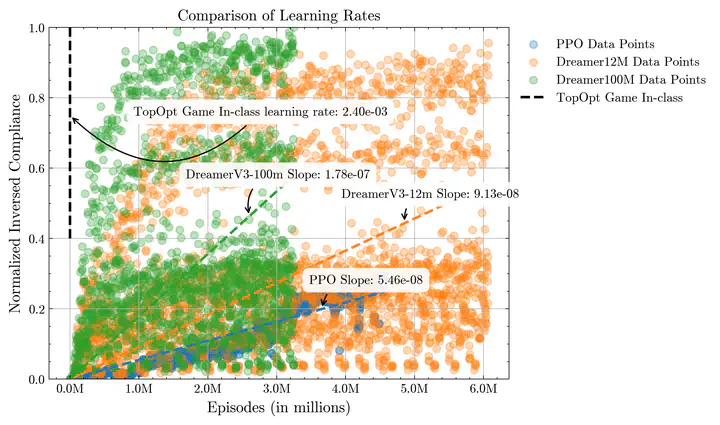

This paper introduces the Structural Optimization gym (SOgym), a novel open-source reinforcement learning (RL) environment designed to advance the application of machine learning in topology optimization (TO). SOgym integrates the physics of TO directly into the reward function so that RL agents learn to generate physically viable and structurally robust designs. To enhance scalability, SOgym uses feature-mapping methods as a mesh-independent interface between the environment and the agent, allowing efficient interaction with the design variables regardless of mesh resolution. Baseline results are presented for a model-free proximal policy optimization (PPO) agent and a model-based DreamerV3 agent. Three observation space configurations were tested. The configuration inspired by the TopOpt game, an interactive educational tool that improves students’ intuition in designing structures to minimize compliance under volume constraints, achieved the best performance and sample efficiency. The 100M-parameter version of DreamerV3 produced structures within 54% of the baseline compliance achieved by traditional optimization methods, with a 0% disconnection rate, an improvement over supervised learning approaches that often struggle with disconnected load paths. When the agents' learning rates are compared with those of engineering students from the TopOpt game experiment, the DreamerV3-100M model shows a learning rate approximately four orders of magnitude lower, an impressive feat for a policy trained from scratch through trial and error. These results suggest that RL has the potential to solve continuous TO problems and the capacity to explore and learn from diverse design solutions. SOgym provides a platform for developing RL agents for complex structural design challenges and is publicly available to support further research in the field.
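
For readers less familiar with the terminology, the problem of minimizing compliance under a volume constraint is commonly written in the form below. This is the standard textbook statement, not necessarily the exact formulation implemented in SOgym. The symbols are assumptions introduced here for illustration: $\mathbf{x}$ for the design variables, $\mathbf{K}$ for the stiffness matrix, $\mathbf{u}$ for the displacement vector, $\mathbf{f}$ for the load vector, and $V^{*}$ for the allowed material volume.

$$
\begin{aligned}
\min_{\mathbf{x}} \quad & c(\mathbf{x}) = \mathbf{f}^{\mathsf{T}} \mathbf{u}(\mathbf{x}) \\
\text{subject to} \quad & \mathbf{K}(\mathbf{x})\, \mathbf{u}(\mathbf{x}) = \mathbf{f}, \\
& V(\mathbf{x}) \le V^{*}
\end{aligned}
$$

A lower compliance $c$ corresponds to a stiffer structure for the same load. Under this notation, one plausible reading of "within 54% of the baseline compliance" is $(c_{\text{agent}} - c_{\text{baseline}}) / c_{\text{baseline}} \le 0.54$, where $c_{\text{agent}}$ and $c_{\text{baseline}}$ are hypothetical names for the compliance of the agent's design and of the traditionally optimized design.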